Know the Bug Before You Chase It

Before diving into the code, take time to understand exactly what you’re dealing with. Rushing the debugging process without context can lead to wasted time or even misdiagnosed problems.

Replicate the Issue in a Controlled Setting

To begin:

Recreate the issue consistently, preferably in a development or staging environment.

Make sure the environment mirrors production closely enough to surface the bug.

Avoid relying exclusively on anecdotal reports; verify them with reproducible cases.

Use Logging and User Reports to Isolate Behavior

Investigation starts with the information you already have:

Review logs for unusual patterns, errors, or anomalies that occurred during the incident.

Gather user reports to identify common conditions (e.g., device, OS, or workflow).

Compare logs from different sessions to locate where the bug diverges from expected behavior.
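One way to compare two sessions is to walk both logs in step and stop at the first event that differs. The sketch below is illustrative only: the `<timestamp> <LEVEL> <message>` line shape, the sample log lines, and the function names are assumptions, not a format prescribed here.

```python
import re
from itertools import zip_longest

# Assumed line shape: "<timestamp> <LEVEL> <message>". Adjust the pattern to your own format.
_TIMESTAMP = re.compile(r"^\S+\s+")


def strip_timestamp(line: str) -> str:
    """Drop the leading timestamp token so identical events compare equal."""
    return _TIMESTAMP.sub("", line.strip(), count=1)


def first_divergence(good_log, bad_log):
    """Return (index, good_event, bad_event) at the first differing event, or None."""
    for index, (good, bad) in enumerate(zip_longest(good_log, bad_log)):
        good_event = strip_timestamp(good) if good is not None else None
        bad_event = strip_timestamp(bad) if bad is not None else None
        if good_event != bad_event:
            return index, good_event, bad_event
    return None


# Hypothetical sessions: one that succeeded and one that hit the bug.
good_session = [
    "2024-01-01T10:00:00Z INFO cart loaded",
    "2024-01-01T10:00:01Z INFO payment authorized",
    "2024-01-01T10:00:02Z INFO order confirmed",
]
bad_session = [
    "2024-01-02T14:30:00Z INFO cart loaded",
    "2024-01-02T14:30:05Z ERROR payment gateway timeout",
]

print(first_divergence(good_session, bad_session))
```

Stripping timestamps first matters because otherwise every line differs and the comparison tells you nothing.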

Identify the Affected Components Clearly

Pinpointing exactly what’s broken is crucial:

Determine which part of the codebase, module, or service is misbehaving.

Distinguish between core logic errors and UI-level glitches.

Create a working hypothesis based on symptoms and affected functionality.

Clarity at this stage lays the foundation for a quicker and more effective debugging process. The goal isn’t just to solve the bug, but to understand it before any code is touched.

Scope the Impact

Before diving code-first into a bug, stop. Ask one thing: how bad is it? Not every issue is a five-alarm fire. Start by defining the severity: are we looking at a crash (high priority), a performance delay (nagging but tolerable), or incorrect data (subtle, possibly dangerous)? This quick triage helps focus energy where it counts.

Next, don’t treat the code in isolation. Check dependencies: are other modules or services part of the problem? A glitch in one system might ripple into others, which is where things get messy fast. Mapping those connections keeps your debugging focused and efficient.

Finally, prioritize fixes based on user impact and business logic. A bug no one notices can probably wait. But if the checkout page throws errors or login delays kill conversions, it jumps the queue. Triage ruthlessly; fix deliberately.
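The triage described above can be expressed as a simple sort. This is a minimal sketch, not a standard scheme: the `Severity` ranking (crash above incorrect data above performance), the `blocks_revenue` flag, and the sample backlog are all assumptions chosen for illustration.

```python
from dataclasses import dataclass
from enum import IntEnum


class Severity(IntEnum):
    # Assumed ranking; your team may reasonably rank incorrect data above crashes.
    PERFORMANCE = 1
    INCORRECT_DATA = 2
    CRASH = 3


@dataclass
class Bug:
    title: str
    severity: Severity
    users_affected: int
    blocks_revenue: bool = False  # hypothetical stand-in for "business logic" impact


def triage(bugs):
    """Order bugs so revenue blockers come first, then by severity, then by reach."""
    return sorted(
        bugs,
        key=lambda bug: (bug.blocks_revenue, bug.severity, bug.users_affected),
        reverse=True,
    )


# Hypothetical backlog.
backlog = [
    Bug("Report export is slow", Severity.PERFORMANCE, users_affected=40),
    Bug("Checkout page throws errors", Severity.CRASH, users_affected=1200, blocks_revenue=True),
    Bug("Invoice totals rounded incorrectly", Severity.INCORRECT_DATA, users_affected=300),
]

for bug in triage(backlog):
    print(bug.severity.name, bug.title)
```

Writing the ordering down as a sort key forces the team to agree on what outranks what before the next incident, rather than during it.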

Smart debugging starts with knowing what truly matters.

Set Up Your Debugging Environment

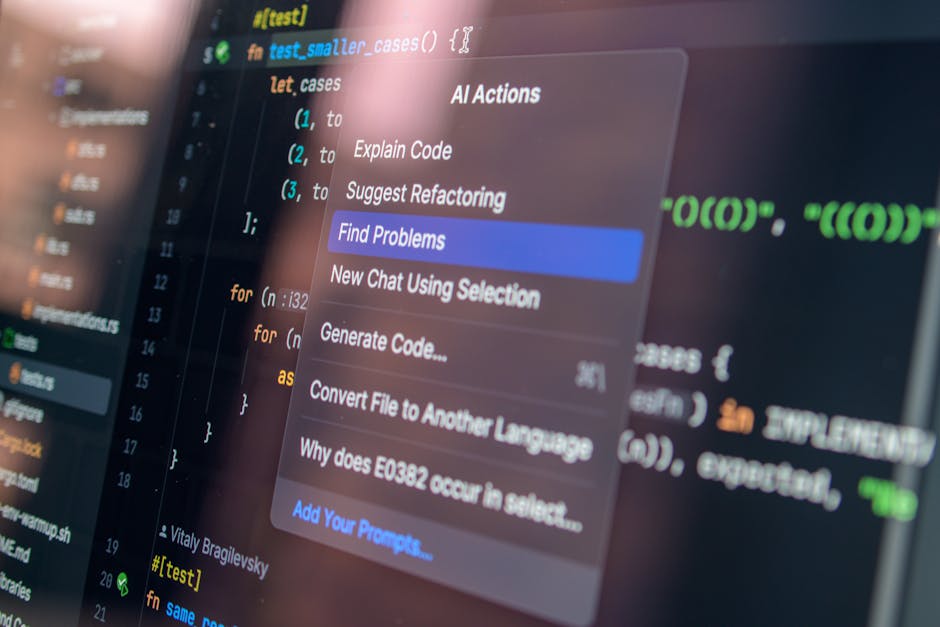

Start by choosing tools that speak your language, literally. If your stack is Node.js, don’t waste time with Java-specific profilers. Pick debuggers that integrate cleanly with your IDE, logging solutions that don’t swallow timestamps, and monitoring tools that show a clear stack trace with minimal fluff.

Breakpoints aren’t optional. Set them with intent. Watch variables like a hawk. Use live debugging sessions when possible so you don’t burn deployment cycles chasing shadows. These are your eyes and ears when the bug doesn’t want to be seen.

Version everything. All of it. Dotenv files (with secrets stripped out), debug config files, test scripts. You shouldn’t be trying to remember what worked between Tuesday and Wednesday. If it’s not tracked, it will break, and someone will blame you.

Keep your environment sharp and battle-ready. Messy setups spawn messy results. Clean tooling leads to faster kills.

(See: protect debugging workflows)

Reproduce, Trace, and Pinpoint

When you’re knee-deep in a mystery bug, clarity is everything. First move: trace the execution path that leads up to the failure. Step through the code strategically. You’re not scanning the whole codebase; you’re building a breadcrumb trail. Start where the failure surfaces and work backward until you find the origin, not just the symptom.

Next, get serious about logs. Not just reading them, but writing better ones. Good logs don’t just tell you what happened; they tell you why. Timestamped outputs, error context, and edge-case traces can be the difference between guessing and knowing. Pair that with infrastructure or app monitoring tools to see what the system was doing at the time of the crash. Was it a spike? A stalled API? Too many threads? Logs don’t lie, as long as they were written honestly in the first place.
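As a rough illustration of logs that carry the “why,” here is a sketch using Python’s standard `logging` module. The `orders` logger name, the `parse_quantity` function, and the `order_id` field are hypothetical; the point is the timestamped format and the context attached to each message.

```python
import logging

# Timestamp, level, and logger name on every line, so events can be ordered and filtered.
logging.basicConfig(
    format="%(asctime)s %(levelname)s %(name)s %(message)s",
    level=logging.INFO,
)
log = logging.getLogger("orders")  # hypothetical component name


def parse_quantity(raw: str, order_id: str) -> int:
    """Parse a quantity field, logging enough context to explain any failure."""
    try:
        quantity = int(raw)
    except ValueError:
        # log.exception records the traceback along with the offending input.
        log.exception("invalid quantity order_id=%s raw=%r", order_id, raw)
        raise
    if quantity <= 0:
        # Edge case: parses fine, but is suspicious enough to leave a trace.
        log.warning("non-positive quantity order_id=%s quantity=%d", order_id, quantity)
    return quantity
```

A bare “parse failed” message would record that something happened; including the order ID and the raw input records why.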

Finally, use the divide-and-conquer method: break the system down into smaller pieces. Toggle features off. Strip inputs down. Roll back recent changes in chunks. If the bug vanishes, one of those blocks was to blame. Narrow the field until there’s nowhere left for it to hide. Minimalism wins here: the leaner the test case, the faster the fix.
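Rolling back changes in chunks is essentially a binary search. The sketch below assumes an ordered list of changes and a hypothetical `is_broken` check that you supply (for example, a script that applies the given changes and runs the failing test). It also assumes the system works with no changes applied, fails with all of them, and stays broken once the culprit is in.

```python
def first_bad_change(changes, is_broken):
    """Binary-search an ordered list of changes for the one that introduces the bug.

    is_broken(applied) is a caller-supplied check: it receives the prefix of
    changes currently applied and returns True if the bug reproduces.
    """
    if not changes:
        raise ValueError("need at least one change to search")
    low, high = 0, len(changes)  # invariant: changes[:low] works, changes[:high] is broken
    while high - low > 1:
        middle = (low + high) // 2
        if is_broken(changes[:middle]):
            high = middle
        else:
            low = middle
    return changes[high - 1]


# Hypothetical history in which the cache change introduced the bug.
history = ["rename-field", "bump-deps", "add-cache", "new-banner", "fix-typo"]
culprit = first_bad_change(history, lambda applied: "add-cache" in applied)
print(culprit)
```

With five changes this takes at most three checks instead of five. If your changes are commits, `git bisect` automates the same idea.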

Patch and Refactor

Fix it fast, but fix it right. Once you’ve pinpointed the bug, the first goal is a minimal, durable patch. No need to rewrite the world; just address the root issue cleanly and keep the blast radius small. Think surgical, not sweeping.

Next, zoom out. Run regression tests to make sure your fix didn’t break neighboring code. A patch that solves one bug but spawns three more isn’t progress; it’s debt disguised as a deliverable. Unit tests, integration tests, sanity checks: this is where they earn their keep.

Finally, take a breath and look at the long term. Could this part of the codebase be more resilient? Is it brittle by design? If there’s a structural weak spot, note it now, even if you can’t tackle it today. A real fix buys time. A thoughtful one sets the team up for fewer fires tomorrow.

Test the Fix

This is where a lot of devs start slacking. Don’t. Tests aren’t optional; unit and integration tests are your gatekeepers. They prove your fix actually works and doesn’t break anything else in the process.

Start with unit tests to check the function or component you just touched. Make sure it handles expected, edge, and downright weird inputs. Then run integration tests to verify it plays nice across modules. If your app hits APIs, databases, or third-party tools, test those connections too.
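To make “expected, edge, and downright weird” concrete, here is a small sketch using Python’s built-in `unittest`. The `apply_discount` function is a hypothetical stand-in for whatever you just patched; the three test methods mirror the three kinds of input.

```python
import unittest


def apply_discount(price_cents: int, percent: int) -> int:
    """Hypothetical function under test: discount a price given in cents."""
    if isinstance(price_cents, bool) or not isinstance(price_cents, int):
        raise TypeError("price_cents must be an int")
    if price_cents < 0:
        raise ValueError("price_cents must be non-negative")
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return price_cents * (100 - percent) // 100


class ApplyDiscountTests(unittest.TestCase):
    def test_expected_input(self):
        self.assertEqual(apply_discount(1000, 25), 750)

    def test_edge_inputs(self):
        self.assertEqual(apply_discount(1000, 0), 1000)  # no discount
        self.assertEqual(apply_discount(1000, 100), 0)   # full discount
        self.assertEqual(apply_discount(0, 50), 0)       # free item

    def test_weird_inputs(self):
        with self.assertRaises(ValueError):
            apply_discount(1000, 150)   # discount over 100%
        with self.assertRaises(ValueError):
            apply_discount(-5, 10)      # negative price
        with self.assertRaises(TypeError):
            apply_discount("1000", 10)  # price arrived as a string
```

Saved as a module, this can be run with `python -m unittest`. The weird-input cases are often the ones that would have caught the original bug.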

Next, get out of the lab. Simulate real-world use. Run on different devices, in various geos, with throttled connections or accessibility tools active. That’s the grit where bugs often hide.

Finally, don’t assume. Confirm the bug you fixed actually behaves differently now or, better yet, doesn’t show up at all. No change? You didn’t fix it; you just moved it. Back to the editor.

Deploy with Care

Shipping a fix isn’t the end; it’s the stress test. For high-impact bugs, a blue-green or staged deployment is standard. It’s controlled, safer, and gives you a rollback path if things sour. Roll out to a slice of traffic or select users first. Let it breathe. Monitor closely.

Logs, error rates, and user behavior after deployment are your early warning system. Don’t just look for crashes; watch for slowdowns, skipped features, or weird usage drops. These can be more subtle but just as damaging.

And if the numbers start looking ugly, don’t overthink it: roll back fast. Delays driven by pride cause damage. A fast revert beats a late apology. This stage isn’t about confidence; it’s about being ready when confidence wavers.
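A staged rollout with a rollback trigger can be sketched in a few lines. Everything here is an assumption for illustration: the hash-based bucketing, the function names, and the thresholds (`max_ratio`, `min_rate`, `min_requests`) are placeholders, and in practice your deployment platform may provide this for you.

```python
import hashlib


def in_canary(user_id: str, percent: int) -> bool:
    """Deterministically place a user in the canary slice (0 to 100 percent of traffic)."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100
    return bucket < percent


def should_roll_back(
    baseline_errors: int,
    baseline_requests: int,
    canary_errors: int,
    canary_requests: int,
    max_ratio: float = 2.0,   # assumed: tolerate up to 2x the baseline error rate
    min_rate: float = 0.01,   # assumed: ignore canary error rates below 1%
    min_requests: int = 100,  # assumed: wait for enough traffic before judging
) -> bool:
    """Return True when the canary's error rate is clearly worse than baseline."""
    if canary_requests < min_requests:
        return False  # not enough data yet; keep monitoring
    baseline_rate = baseline_errors / max(baseline_requests, 1)
    canary_rate = canary_errors / canary_requests
    return canary_rate > max(baseline_rate * max_ratio, min_rate)
```

Hashing the user ID keeps each user on the same side of the rollout between requests, and agreeing on the rollback threshold in advance removes the temptation to argue with the numbers.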

Log It, Learn From It

Debugging doesn’t end when the bug is fixed. To avoid repeating the same mistakes, and to improve the entire development process, it’s critical to document what happened, how it was resolved, and what the team can do better next time.

Capture the Root Cause

A clear historical record of the bug helps:

Train new developers

Improve debugging speed for similar issues in the future

Identify recurring failure patterns in the codebase

Document the following:

What triggered the bug (edge case, regression, user action, etc.)

Affected systems or modules

Steps taken to isolate and resolve the issue

Long-term changes made, if any
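If you want these records to be searchable later, one option is to capture them as structured data rather than free-form notes. The sketch below is only one possible shape: the `BugRecord` class, its field names, and the sample values are hypothetical and simply mirror the four items listed above.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class BugRecord:
    """Hypothetical record mirroring the four items to document."""
    trigger: str
    affected_systems: list
    resolution_steps: list
    long_term_changes: list = field(default_factory=list)

    def to_json(self) -> str:
        """Serialize the record so it can be stored or searched later."""
        return json.dumps(asdict(self), indent=2)


# Illustrative values only.
record = BugRecord(
    trigger="Regression: empty cart submitted at checkout",
    affected_systems=["checkout-service", "payment-gateway client"],
    resolution_steps=[
        "Reproduced in staging with an empty cart",
        "Traced failure to a missing guard before payment authorization",
        "Added the guard plus a unit test for the empty-cart case",
    ],
)

print(record.to_json())
```

A consistent shape makes it easier to spot recurring failure patterns across many records.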

Feed Lessons into Retrospectives

Once documented, bring those insights into your dev team’s regular retrospectives:

Ask: Could this have been caught with better tests or earlier validation?

Share tools or scripts that helped identify the bug

Use the findings to update coding standards, review checklists, or onboarding docs

Secure the Debugging Pipeline

Beyond learning, make sure the debugging process itself is protected and repeatable.

Lock down production logs and monitoring tools with proper access controls

Version all debugging tools and environment configurations

(More on securing the process: protect debugging workflows)

Make Debugging a Culture, Not an Emergency

Good debugging isn’t an afterthought; it’s a habit. Teams that treat it as a back-burner task usually pay for it later, in outages no one wants to own. So start by baking root-cause investigation into your work cycles. That means setting aside actual sprint time, not just hoping someone gets to it between feature requests and bug reports.

Thoroughness should be rewarded more than quick fixes. Fast doesn’t mean better if the issue resurfaces next month. Encourage engineers to trace problems to the bottom, even if it delays a deployment by a day. The trust and stability it builds compound quickly.

And don’t let wins vanish into silence. If someone finds a clever config issue, a tool that sliced debug time in half, or a way to prevent the same bug from reaching prod again, share it. Set up five-minute demos during stand-ups or drop notes in an internal knowledge base. Debugging should be a team sport, not a solo triage mission.