Setting the Stage

Crash logs aren’t just digital debris; they’re storyboards of real failure. Unlike contrived bug examples or textbook exercises, real-world crash logs drop you into unfiltered chaos. You get actual runtime conditions, raw stacks, and the fingerprints of an issue that mattered enough to break something in production. That’s why they’re so useful. They’re not just about what went wrong; they show how, when, and under what exact pressure things snapped.

This walkthrough isn’t here to wrap the bug in a bow. It’s here to make developers better. Debugging isn’t magic; it’s method. By unpacking one gnarly crash log line by line, we’ll sharpen our instincts: spotting patterns, asking better questions, and getting faster at zeroing in on the root cause.

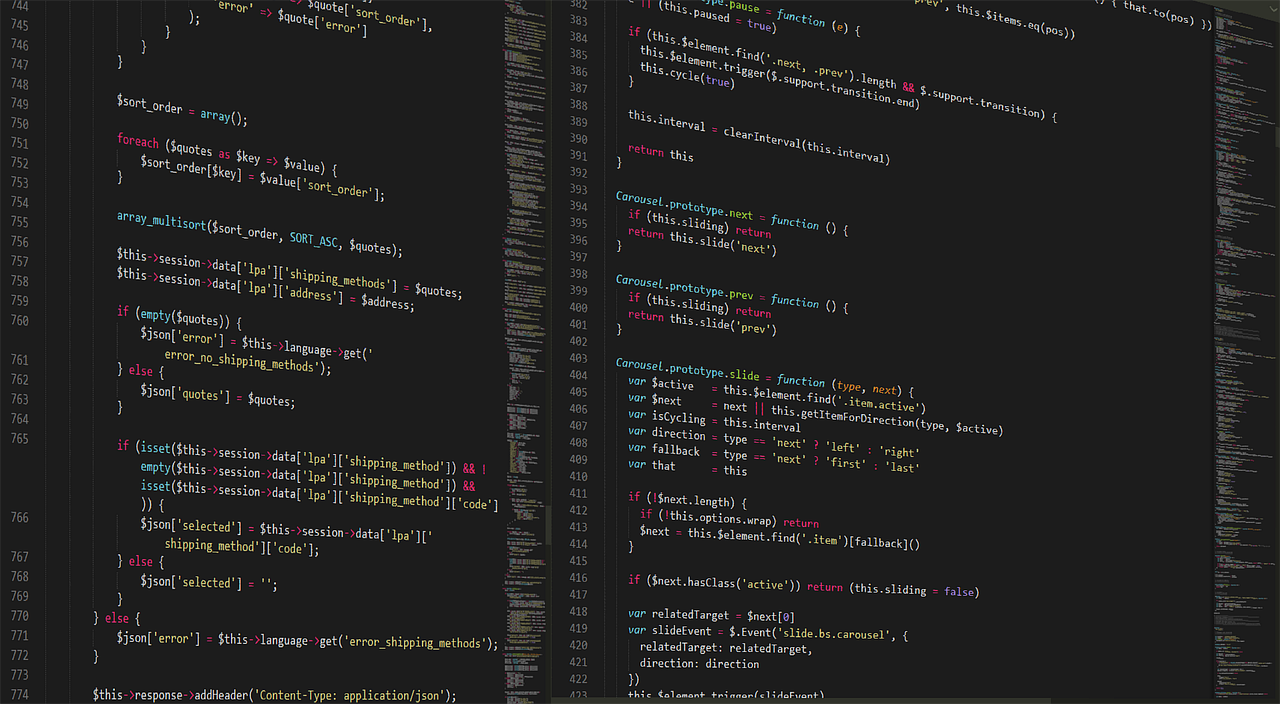

The system we’re diving into? A Linux-based microservice written in Go, tied into a containerized backend running in Kubernetes. The stack includes gRPC, Redis, and some light C interaction via cgo. The crash itself? A messy slice bounds panic triggered during concurrent request handling. It isn’t pretty. But that’s the point.

Reading the Log Like a Human (Not Just a Machine)

When a crash hits, the first clue is usually the signal or failure type. Think SIGSEGV (segmentation fault), panic (often seen in Go or Rust), or an assertion failure; each tells you something slightly different about what just exploded. A segfault? You probably dereferenced memory you had no right to touch. Panic? Something went off the rails, and the program decided it was safer to bail. Assertion failures are a bit louder: they’re the code yelling, “This should never happen.”
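To make the distinction concrete for Go, here is a minimal sketch that triggers a slice bounds panic and checks whether the panic came from the runtime or from an explicit `panic` call. The helpers `firstN` and `classify` are hypothetical names invented for this illustration; they are not taken from the service discussed here.

```go
package main

import (
	"fmt"
	"runtime"
)

// firstN is a hypothetical helper that returns the first n bytes of buf.
// Slicing past cap(buf) makes the Go runtime panic.
func firstN(buf []byte, n int) []byte {
	return buf[:n]
}

// classify runs fn and reports the panic message, if any, and whether the
// panic was raised by the runtime (bounds check, nil dereference) rather
// than by an explicit panic call in application code.
func classify(fn func()) (msg string, fromRuntime bool) {
	defer func() {
		if r := recover(); r != nil {
			_, fromRuntime = r.(runtime.Error)
			msg = fmt.Sprint(r)
		}
	}()
	fn()
	return "", false
}

func main() {
	// Runtime panic: the slice expression exceeds the buffer's capacity.
	msg, fromRuntime := classify(func() { firstN(make([]byte, 3), 5) })
	fmt.Printf("runtime=%v msg=%q\n", fromRuntime, msg)

	// Explicit panic: the code itself decided to bail, much like an assertion.
	msg, fromRuntime = classify(func() { panic("invariant violated: queue is empty") })
	fmt.Printf("runtime=%v msg=%q\n", fromRuntime, msg)
}
```

A runtime-originated panic usually points at a specific faulty operation, while an explicit panic points at a violated assumption that someone wrote down on purpose.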

Once you’ve locked onto the signal, the next step is walking the stack trace like a crime scene. Start from the top: what function was the program in when it crashed? Then work your way down the list. Don’t skim. Look for your code; library calls are often just noise. The payoff is in the application-level function calls leading up to the crash.

Strip out generic system frames, async wrappers, and debug hooks: anything that’s not directly involved in the flow. What’s left should tell you what broke, where it broke, and maybe even why it broke. If the trace includes line numbers, that’s the jackpot. But even without them, you’re building a trail of breadcrumbs back to the bug’s front door.
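The filtering step can be sketched in a few lines of Go. This assumes the usual Go runtime trace layout, where each function line is followed by a tab-indented `file:line` entry. The function `appFrames`, the module path `example.com/svc`, and the sample trace are all invented for illustration.

```go
package main

import (
	"fmt"
	"strings"
)

// appFrames keeps only the frames whose function name starts with prefix
// (for example, your module path) and pairs each with its location line.
func appFrames(trace, prefix string) []string {
	var frames []string
	lines := strings.Split(trace, "\n")
	for i := 0; i+1 < len(lines); i++ {
		fn := strings.TrimSpace(lines[i])
		loc := lines[i+1]
		if strings.HasPrefix(fn, prefix) && strings.HasPrefix(loc, "\t") {
			frames = append(frames, fn+" @ "+strings.TrimSpace(loc))
			i++ // skip the location line that was just consumed
		}
	}
	return frames
}

// sampleTrace is a made-up trace; every package, path, and offset in it
// is hypothetical.
var sampleTrace = strings.Join([]string{
	"goroutine 42 [running]:",
	"example.com/svc/cache.(*Store).Window(...)",
	"\t/app/cache/store.go:58 +0x1d",
	"example.com/svc/api.(*Server).Handle(...)",
	"\t/app/api/server.go:112 +0x9a",
	"thirdparty.example/rpc.(*Server).dispatch(...)",
	"\t/deps/rpc/server.go:301 +0x4c",
}, "\n")

func main() {
	for _, f := range appFrames(sampleTrace, "example.com/svc/") {
		fmt.Println(f)
	}
}
```

The first frame returned is the topmost one that belongs to the application, which is usually the right place to start reading code.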

This isn’t magic; it’s triage and detective work. The cleaner and more focused your reading, the faster you’ll fix things.

Stepping Through the Suspect Code

It starts with the stack trace. That messy list of function calls isn’t just noise; it’s your breadcrumb trail. Grab the topmost frame that belongs to your codebase (not some libc internals or third-party library). That line is ground zero. Pull up the corresponding snippet, match the program counter or instruction offset, and drop into your IDE. Now you’re not guessing; you’re hunting something real.

But a crash log won’t hand over context. To understand what went wrong, you’ve got to mentally walk the call path. What were the inputs? Who called this function, and under what conditions? Was the object initialized? Did that pointer come from an allocation that might’ve already been freed? At this point, reading code is only half the job. Reconstructing the state of key variables is the other half. That might mean digging into logs, checking earlier stack frames, or even throwing in print statements during a test rerun.

And remember, the stack trace tells you where the crash happened, not always why. Sometimes the real failure occurred several calls before, when some variable quietly got corrupted. Maybe an array went one index too far four frames up. Maybe you stepped into a race condition masked 99 times out of 100. So don’t trust the trace blindly. Sweat the details, read side effects, and question everything. The log gives you a spotlight, not the full stage.

Common Culprits: Memory Mishaps and Concurrency Chaos

Uninitialized pointers, null references, and dangling memory aren’t just textbook landmines; they show up with real consequences in production logs. Trouble is, they rarely announce themselves directly. Instead, you’ll spot vague crashes: segfaults in seemingly innocent lines of code, backtraces pointing somewhere “safe,” or unexplained crashes in stable builds. These logs are often the smoke, not the fire.

One common pattern: you see a segmentation fault in a utility function that doesn’t deal with memory allocation. What’s really happening? A shared object was modified or half-deleted by another thread just microseconds before your call. The crash points at the final straw, not the thread that broke the camel’s back.

Take a classic case from a multi-threaded file parser. An engineer noticed periodic crashes with a top-of-stack memcpy call. Standard review showed nothing wrong. But flipping through prior logs revealed inconsistent object states: sometimes the parser cache was null, other times just stale. The culprit? A race between cleanup and reuse. Once the team added timestamp-based guards and locked access, the crashing stopped.
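The locked-access half of that fix might look roughly like the following in Go. This is a sketch under assumptions, not the team’s actual code: `parserCache` and its methods are hypothetical, and the timestamp-based guards are left out. The key point is that the length check and the slice operation happen inside the same critical section, so cleanup cannot shrink the buffer between them.

```go
package main

import (
	"fmt"
	"sync"
)

// parserCache is a hypothetical stand-in for the shared parser cache.
type parserCache struct {
	mu  sync.Mutex
	buf []byte
}

// Reset is the cleanup path: it drops the cached bytes.
func (c *parserCache) Reset() {
	c.mu.Lock()
	defer c.mu.Unlock()
	c.buf = nil
}

// Fill is the reuse path: it replaces the cached bytes with a copy of data.
func (c *parserCache) Fill(data []byte) {
	c.mu.Lock()
	defer c.mu.Unlock()
	c.buf = append(c.buf[:0], data...)
}

// Prefix returns a private copy of the first n cached bytes. It reports
// false instead of panicking when the cache is empty or too short.
func (c *parserCache) Prefix(n int) ([]byte, bool) {
	c.mu.Lock()
	defer c.mu.Unlock()
	if n < 0 || n > len(c.buf) {
		return nil, false
	}
	out := make([]byte, n)
	copy(out, c.buf[:n])
	return out, true
}

func main() {
	c := &parserCache{}
	var wg sync.WaitGroup
	for w := 0; w < 4; w++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			for i := 0; i < 1000; i++ {
				c.Fill([]byte("header:payload"))
				c.Prefix(6) // may report false if another worker just reset
				c.Reset()
			}
		}()
	}
	wg.Wait()
	fmt.Println("finished without a bounds panic")
}
```

Returning a copy rather than a sub-slice matters here: a sub-slice would keep pointing into memory that the reuse path overwrites after the lock is released.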

The takeaway: when logs point to memory issues, don’t fixate on the failing line. Step back. Ask who touched the data and when. Track object lifecycles. And most of all, log consistently, because patterns matter more than single entries.

For a comprehensive breakdown of how race conditions sneak into production and how to trace them back, check out “Understanding Race Conditions Through Practical Examples.”

Lessons for 2026 Dev Teams

Logging Smarter: What’s Worth Capturing

Crash logs are only as helpful as the logging strategy behind them. Modern debugging expects more than a generic stack trace: you need actionable context. Here’s what smart logging in 2026 should include:

Timestamps tied to user and system actions

Input parameters leading into the failed section

Environment data (OS, version, active feature flags)

Thread identifiers to clarify concurrent activity

Custom breadcrumbs showing recent internal events

Prioritize clarity over volume. Too much noise can drown out the clue you’re looking for.
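As one possible shape for such a record, the sketch below uses Go’s standard `log/slog` package (Go 1.21 or later) to emit a single structured entry. The `CrashContext` type, its field names, and the log keys are assumptions made for illustration. Go does not expose goroutine IDs, so a request ID stands in for the thread identifier.

```go
package main

import (
	"context"
	"log/slog"
	"os"
	"runtime"
	"time"
)

// CrashContext is a hypothetical bundle of the fields listed above.
type CrashContext struct {
	At          time.Time // when the triggering action happened
	RequestID   string    // stand-in for a thread identifier
	Offset, Len int       // inputs to the section that failed
	Flags       []string  // active feature flags
	Breadcrumbs []string  // recent internal events, oldest first
}

// crashAttrs turns the context into structured attributes so that each
// field stays queryable instead of being buried in a message string.
func crashAttrs(c CrashContext) []slog.Attr {
	return []slog.Attr{
		slog.Time("event_time", c.At),
		slog.String("request_id", c.RequestID),
		slog.Group("input", slog.Int("offset", c.Offset), slog.Int("len", c.Len)),
		slog.Group("env",
			slog.String("os", runtime.GOOS),
			slog.String("go", runtime.Version()),
			slog.Any("flags", c.Flags),
		),
		slog.Any("breadcrumbs", c.Breadcrumbs),
	}
}

func main() {
	logger := slog.New(slog.NewJSONHandler(os.Stderr, nil))
	c := CrashContext{
		At:          time.Now(),
		RequestID:   "req-1842",
		Offset:      4,
		Len:         9,
		Flags:       []string{"new-parser"},
		Breadcrumbs: []string{"cache miss", "cache reset", "window requested"},
	}
	logger.LogAttrs(context.Background(), slog.LevelError,
		"slice bounds panic recovered", crashAttrs(c)...)
}
```

Keeping the breadcrumb list short and bounded is one way to honor the clarity-over-volume rule.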

Tools That Augment Human Debugging

Identifying the crash is just the beginning. These tools extend your debugging superpowers:

Auto-symbolication: Automatically translates memory addresses into human-readable function names, file paths, and line numbers. Essential for native crashes.

Sentry (or similar platforms): Groups similar errors, preserves user context, and integrates directly with your issue tracker.

LLDB / GDB: Low-level debuggers that let you inspect program memory, thread states, and call stacks in real time. Especially vital for C, C++, and Rust-based systems.

Static analyzers: Catch issues like null dereferences or unprotected access before they crash in production.

Interactive crash replay: Tools that let teams recreate the context of a crash post-mortem, like rr (record and replay) for native apps.

From Blame to Collaboration

Crash logs should never become evidence in a blame game. Instead, use them as opportunities to improve team resilience.

Share logs and findings during retrospectives or post-mortems

Tag logs with affected modules or features, not people

Keep discussions focused on what happened, not who missed it

By treating crash logs as shared learning resources, teams debug faster, code smarter, and build a culture of psychological safety around failure.

Final Checklist Before You Submit the Patch

Before closing the book on a crash, you need to get methodical. Step one: reproduce the issue. If it happens consistently, strip the system down to the failing conditions and record everything: inputs, environment, steps. If it’s non-deterministic, explain why. Was it a race? An edge case in scheduling? Either way, document how you hunted it down. This becomes the breadcrumb trail someone else will thank you for later.
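For intermittent failures, a small stress harness can turn “it crashes sometimes” into a measurable failure rate. The sketch below is one way to do that in Go. The names `stress` and `flaky` are hypothetical, and `flaky` is a deterministic stand-in for the real suspect so the example stays runnable; in practice you would pass the actual handler and run the harness under the race detector with `go run -race` or `go test -race`.

```go
package main

import (
	"fmt"
	"sync"
	"sync/atomic"
)

// stress calls fn from the given number of goroutines, iters times each,
// and counts how many calls panicked. Recovering inside each call keeps a
// single failure from ending the whole run.
func stress(workers, iters int, fn func()) (calls, panics int64) {
	var wg sync.WaitGroup
	var nCalls, nPanics atomic.Int64
	for w := 0; w < workers; w++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			for i := 0; i < iters; i++ {
				func() {
					defer func() {
						if recover() != nil {
							nPanics.Add(1)
						}
					}()
					nCalls.Add(1)
					fn()
				}()
			}
		}()
	}
	wg.Wait()
	return nCalls.Load(), nPanics.Load()
}

// flaky returns a stand-in suspect that panics on every `every`-th call.
func flaky(every int64) func() {
	var n atomic.Int64
	return func() {
		if n.Add(1)%every == 0 {
			panic("simulated intermittent failure")
		}
	}
}

func main() {
	calls, panics := stress(8, 250, flaky(100))
	fmt.Printf("%d panics in %d calls\n", panics, calls)
}
```

Recording the worker count, iteration count, and observed failure rate alongside the fix gives the next person a concrete way to confirm the bug is gone.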

Next, regression tests. Not just one, if you can help it. Cover the core path where the bug was found, plus any adjacent logic that might also be shaky. This is especially important when the fix isn’t just a local patch but touches shared modules. You’re not just proving that the bad behavior is gone; you’re building a safety net for six months from now.
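A table of cases is a common way to cover both the core path and its neighbors in Go. The sketch below assumes a hypothetical patched function, `window`, that returns an error where the old code would have panicked. In a real repository the table would normally live in a `_test.go` file and be driven by `go test` with `testing.T`; it runs from `main` here only to keep the example self-contained.

```go
package main

import (
	"errors"
	"fmt"
)

// ErrOutOfRange marks a request that falls outside the buffer.
var ErrOutOfRange = errors.New("window out of range")

// window is a hypothetical patched function. It returns buf[off:off+n],
// or an error with context instead of a slice bounds panic.
func window(buf []byte, off, n int) ([]byte, error) {
	if off < 0 || n < 0 || off > len(buf) || n > len(buf)-off {
		return nil, fmt.Errorf("%w: off=%d n=%d len=%d", ErrOutOfRange, off, n, len(buf))
	}
	return buf[off : off+n], nil
}

type regressionCase struct {
	name    string
	buf     string
	off, n  int
	want    string
	wantErr bool
}

// The first case is the path that crashed; the rest probe adjacent edges.
var cases = []regressionCase{
	{"length past end (original crash)", "abc", 0, 5, "", true},
	{"exact fit", "abc", 0, 3, "abc", false},
	{"empty window at end", "abc", 3, 0, "", false},
	{"offset past end", "abc", 4, 0, "", true},
	{"negative length", "abc", 1, -1, "", true},
}

// runCases returns the names of the cases that did not behave as expected.
func runCases() []string {
	var failures []string
	for _, tc := range cases {
		got, err := window([]byte(tc.buf), tc.off, tc.n)
		if (err != nil) != tc.wantErr || string(got) != tc.want {
			failures = append(failures, tc.name)
		}
	}
	return failures
}

func main() {
	if failures := runCases(); len(failures) > 0 {
		fmt.Println("regressions:", failures)
		return
	}
	fmt.Println("all regression cases pass")
}
```

Naming the first case after the original crash keeps the link between the test and the incident visible long after the post-mortem is forgotten.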

Finally, put together a quick post-mortem. It doesn’t have to be fancy: a few bullets in a team doc or a slide in sprint review. What failed, what fixed it, what we learned. Share the log snippet that mattered. Mention the tool or hunch that cracked it. This turns a stressful debug cycle into institutional knowledge, not tribal wisdom locked in someone’s head.